Concerns over the use of Artificial Intelligence (AI) in education have led to the rollout of John Jay’s AI Responsible Use Guidelines. The guidelines were released on May 1, 2024, and are expected to be updated as needed.

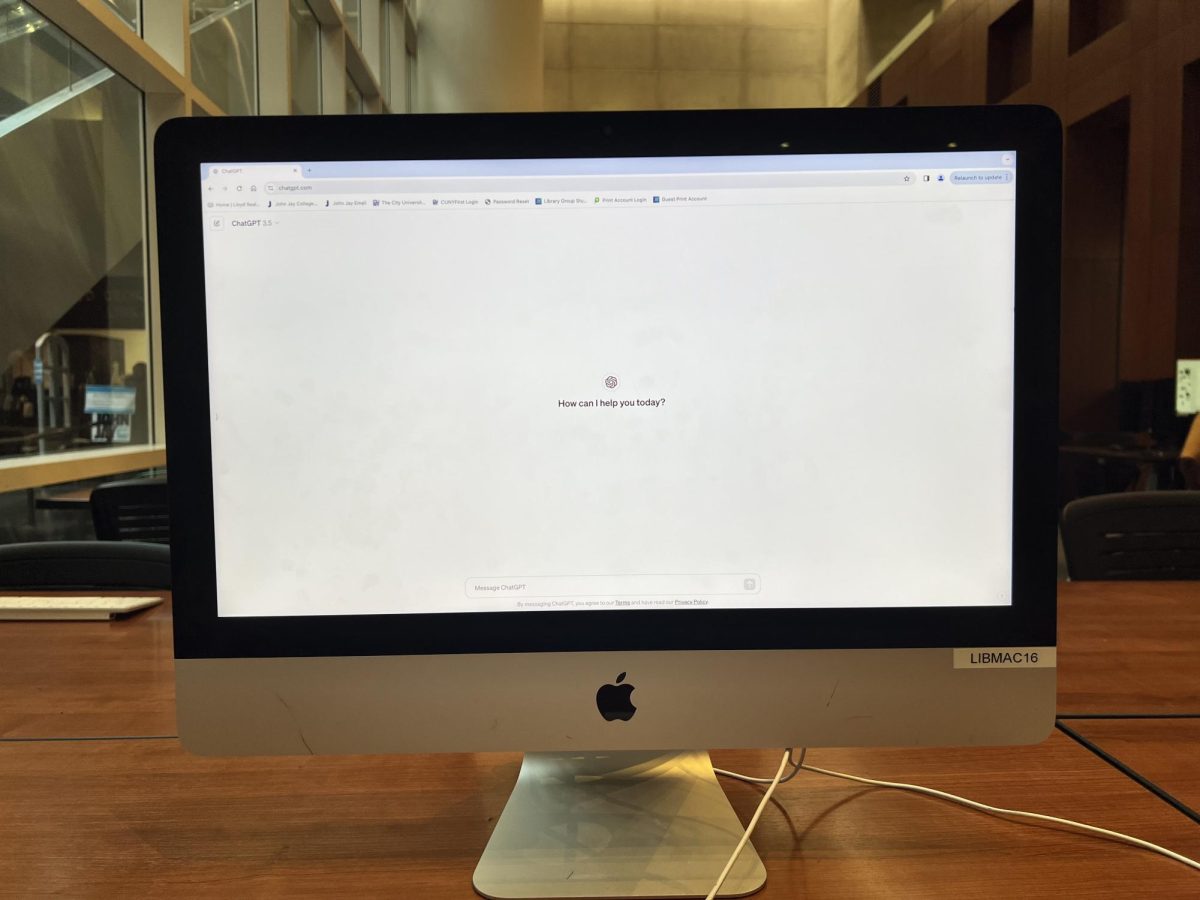

The topic of AI exploded into the mainstream media with the release of ChatGPT, a free-to-use AI chatbot and virtual assistant. ChatGPT is based on generative artificial intelligence and large language models, which predict and generate responses based on the prompts that users enter.

Since ChatGPT’s release, students have been using AI in educational settings.

While some students and professors are eager to uncover AI’s capabilities, others are apprehensive about students using AI in the classroom.

CUNY’s Academic Integrity Policy has been updated to reflect students’ use of AI in the classroom.

Provost Allison Pease assembled a committee of students, faculty, and staff to create John Jay’s AI Responsible Use Guidelines.

The guidelines provide information for both students and faculty members on how to use AI in an ethical manner. These guidelines also address numerous key issues regarding AI use in classrooms: data privacy, prompt literacy, bias, crediting AI, and AI detection software.

Dr. Penny Geyer, a professor in the Law, Police Science, & Criminal Justice department, shared her thoughts on the committee.

“I think that was really helpful because I think it took a lot of individuals that are working here and have varying opinions, from fully supporting AI to not supporting it,” she said. “I think that was the best thing that they [John Jay’s Administration] did because it [the new guidelines] brought a whole bunch of minds together to come up with something that isn’t strict and narrow.”

Geyer has also noticed a significant increase in the use of AI in her students’ work. Although she thinks that open dialogue with students is key to preventing cheating, she still voiced concerns about how AI is used.

“It’s when it becomes too much of a crutch and it gets so heavily leaned on that, I think sometimes the creativity and the actual knowledge that one might have almost gets kind of suppressed because it gets so reliant on, ‘Oh, I can just do this,’” said Geyer.

Jennifer Dobbins, an academic integrity officer and one of the members of the AI committee, has been reviewing the influx of reports related to the unethical use of AI.

“I can certainly tell you that last fall was a nightmare. The reports were flying [in], and it was a hot mess,” she said. “Most of it had to do with things like ChatGPT.”

One of the biggest fears that Dobbins has heard from instructors is that students won’t be learning anymore.

Although the technology is fairly new, she asserts that plagiarism is plagiarism.

But while the process of reviewing the academic integrity reports is quite similar to how the office has reviewed them in the past, Dobbins also acknowledged how AI is changing the way others think about academic integrity.

“I don’t want to demonize this idea of artificial intelligence as a potential learning tool,” she said. “I’m saying that the conversations that it’s kicking up is causing us as a college to question everything we think, including what we think constitutes academic integrity.”

Olivia Fratangelo, a John Jay student majoring in computer science and philosophy, was also a part of the AI committee.

In her role on the committee, Fratangelo primarily worked on the data privacy portion of the guidelines. She emphasized the importance of students not putting sensitive information into AI chatbots because that data is not private.

Fratangelo also pointed out that student data is protected by the Family Educational Rights and Privacy Act (FERPA).

“Professors and any faculty should not be sharing that student data on an AI database because then it gets built into that database and it could regurgitate that information back out at any time,” said Fratangelo.

Dr. Hunter Johnson, a professor of mathematics and computer science, worked on the AI guidelines as part of the committee as well. Unlike others at John Jay, he has embraced the use of AI in the classroom.

Johnson teaches cryptography and machine learning and has incorporated AI into his classes. He encourages students to use AI tools such as ChatGPT for assignments and projects, though he notes that students often do not take full advantage of their capabilities.

“I find when students are using it often, they write very minimally,” he said. “I guess, maybe because they’re used to Googling or something. Instead of giving ChatGPT an outline of the solution, they kind of rely on it to do more thinking than it’s really capable of doing.”

Johnson further elaborated on how the capabilities of AI are helpful in research.

“I have two PRISM students and we work on exotic topics,” he said. “You know, that’s what research is, sort of unexplained areas, so sometimes we want to know something about a really obscure piece of software.”

As AI becomes increasingly integrated into the lives of students, the John Jay community will continue to adapt to its use in the classroom.

“We have to be teaching people and giving them assignments to help them think, not just regurgitate information because that’s all ChatGPT is doing,” said Fratangelo.